- cross-posted to:

- [email protected]

Perhaps it’s becoming clear that search needs to become a common, cooperatively managed infrastructure similar to Wikipedia, and that this is in the best interest of everyone but advertisers and spammers.

Too bad the Mozilla Foundation didn’t pivot to that instead of whatever the hell they’re doing with AI.

Truly. I wonder if ActivityPub could be used to create a resilient search engine that shares the cost among federated instances. We already have something like that in Lemmy and Mastodon, where federated data can be searched from any instance. If the data were pages fetched by an automatic crawler and federated across instances, each of which lets you search through it, it might resemble a search engine. Page ranking beyond text matching could even be done by people’s up/down votes instead of some arbitrary algorithm, similar to how voting works on StackExchange or Lemmy. 🤔 I’m sure someone is thinking about this.
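To make the idea a bit more concrete, here is a rough Python sketch of vote-based ranking over crawled pages shared between instances. Everything in it is hypothetical: the `Page` record, `merge_votes`, and `search` are made-up names, no real ActivityPub library or protocol message is involved, and simply summing votes across instances is an assumption that ignores double counting and vote manipulation.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """A crawled page as one instance might store it (hypothetical schema)."""
    url: str
    text: str
    upvotes: int = 0
    downvotes: int = 0


def merge_votes(local, remote):
    """Combine page records from two instances, summing votes per URL.

    Assumption: each vote is counted on exactly one instance.
    """
    merged = {}
    for page in list(local) + list(remote):
        if page.url in merged:
            seen = merged[page.url]
            seen.upvotes += page.upvotes
            seen.downvotes += page.downvotes
        else:
            # Copy so the caller's records are not mutated.
            merged[page.url] = Page(page.url, page.text, page.upvotes, page.downvotes)
    return list(merged.values())


def search(pages, query):
    """Plain text matching first, then order the hits by net votes."""
    terms = query.lower().split()
    hits = [p for p in pages if all(t in p.text.lower() for t in terms)]
    return sorted(hits, key=lambda p: p.upvotes - p.downvotes, reverse=True)
```

Calling `search(merge_votes(mine, theirs), "air purifier")` would return only pages containing both words, with the best net score first. Whether this holds up at internet scale is a separate question; the sketch only covers the ranking part.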

The answer to your question is no, federation is not an appropriate model for internet scale search.

They can’t. Google is their main source of income.

How can I find more of these kinds of sites?

Wow

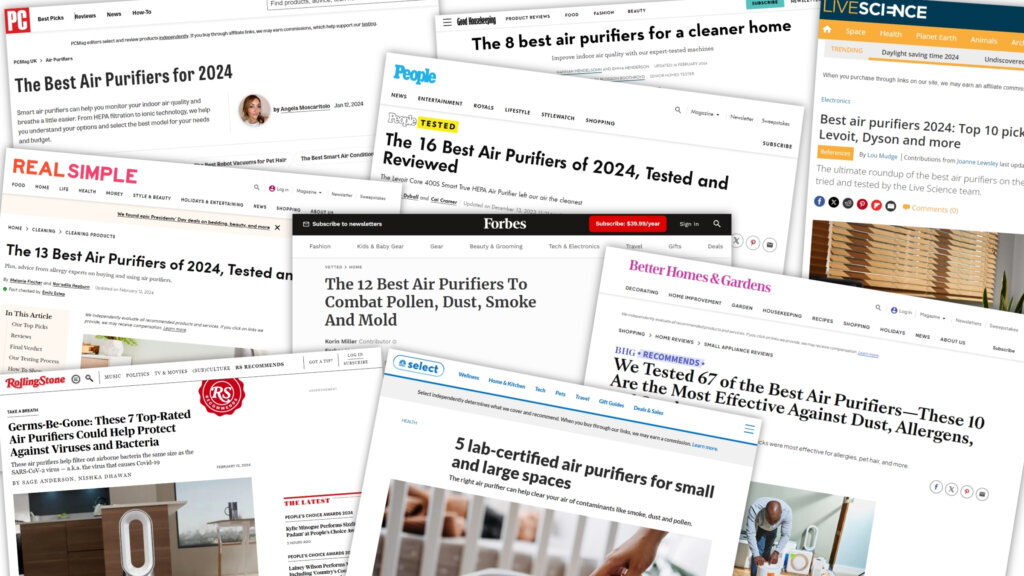

alt-text: Google results for “best air purifiers "dotdash meredith"”, with People, Better Homes & Gardens, and a dozen other brands showing up, all reusing the same low-quality content

Thanks a lot for sharing this.

It’s been said before: Google does not find you the best result for your query. Google finds you the result that makes them the most money from AdSense and has words from your query.

If Mozilla wasn’t funded by Google, the best thing they could do is include a helpful/unhelpful ranking for websites, then filter Google results by that. Search should be social, not commercial.

Google’s method of ranking results has clearly had a detrimental effect on website content and structure as well. I can’t believe how much nonsense junk padding there is on all the top results. You can understand why people are happy to have an LLM sift through the junk and make up an answer, even if it’s wrong half the time.